BERT vs GPT: Architectures, Use Cases, Limits

Understand BERT vs GPT: pretraining objectives, generative ability, strengths, weaknesses, and when to choose each for NLP workloads.


People comparing BERT vs GPT usually look for a simple winner, but the real value comes from understanding how each model fits into a specific workflow. Teams building chat assistants, internal knowledge tools, recommendation engines, or search pipelines need clarity on whether a model should generate fluent text or extract structured meaning from existing language. That choice affects everything from hosting costs to latency to how well a product behaves once real users start relying on it.

BERT entered the scene through Google’s research labs as a way to improve language understanding. It focuses on reading a sentence as a whole, absorbing context from all sides, and representing meaning in a dense, machine-friendly format. GPT took the opposite route in practice: trained to keep writing based on previous tokens, it became useful for conversation, storytelling, role-play agents, and general text synthesis.

Today, companies don’t choose between abstract research papers; they choose a tool that matches a task—semantic search, chatbots, moderation pipelines, classification, summarization, or domain-specific assistants. This article breaks down architectures, training patterns, strengths, limits, and practical use cases so product teams and founders can choose what to build next and understand how a custom solution can be developed with Scrile AI.

Two Models, Two Directions

BERT appeared in 2018 as a research release from Google, published with open checkpoints that developers could fine-tune on a single GPU rather than a large training cluster. It focused on language understanding rather than generating text, which made it appealing for search, classification, tagging, and systems that needed structure over fluency. GPT arrived slightly earlier in a much smaller form, but the series gained momentum when OpenAI scaled training data and parameters year after year, turning a predictive decoder into a full conversational engine. The two models share the Transformer blueprint, yet they evolved along different paths because their creators chased different goals.

The difference between BERT and GPT starts with how each handles context. BERT reads the full sentence at once to understand relationships between words, while GPT predicts the next token based only on previous tokens. When people compare GPT vs BERT, they’re usually deciding between a system that extracts meaning and a system that writes something new. This divide shaped modern tools: semantic search and ranking pipelines rely on BERT-style embeddings, while chat interfaces lean toward generative transformers.

Why Bidirectionality Matters for Understanding

BERT hides parts of a sentence during training and learns to fill them in. That process, known as masked language modeling, pushes the model to form a conceptual map of the text rather than predict long coherent passages. It can fill in missing words, so whether BERT counts as a generative model depends on how loosely the term is used, but its architecture was never optimized for extended narratives. GPT moves through text one token at a time, treating everything as a continuation task, which fits the needs of assistants, role-play agents, and content tools.

Where BERT tends to shine:

- classification, labeling, and entity extraction

- search embeddings and document clustering

- moderation pipelines where precision matters

Where GPT shows practical advantages:

- conversational agents and story-driven apps

- content synthesis, rewriting, expansion

- dynamic assistants that must respond with full sentences

Architecture and Training Philosophy

Both models rely on the Transformer framework, but they use different halves of it. BERT runs on an encoder stack that turns text into dense, context-rich representations. GPT builds on a decoder stack designed to extend text token by token. This architectural split influences how each model handles ambiguity, memory, latency, and scaling. When teams evaluate BERT vs GPT architecture, they’re deciding between a tool that interprets language and a tool that continues it.

Encoders process all tokens at once, letting the model consider entire sentences during training. Decoders generate text token by token, processing earlier output as part of the input stream. These mechanics lead to different training objectives, evaluation metrics, and even infrastructure choices. For example, encoder workloads often benefit from batch processing and multi-document pipelines, while generative workloads usually emphasize streaming output and fast token sampling.
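The difference between "all tokens at once" and "token by token" comes down to which positions each token is allowed to look at. The sketch below is an illustration only: it builds the two visibility patterns as plain lists rather than real attention tensors, and the function names are made up for this example.

```python
def bidirectional_mask(n):
    """Encoder-style pattern: every position may look at every other position."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style pattern: position i may look only at positions 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def visible_tokens(tokens, mask, position):
    """Return the tokens that `position` is allowed to see under `mask`."""
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["the", "bank", "approved", "the", "loan"]
enc = bidirectional_mask(len(tokens))
dec = causal_mask(len(tokens))

# The ambiguous word "bank" sits at index 1.
print(visible_tokens(tokens, enc, 1))  # whole sentence, including "loan"
print(visible_tokens(tokens, dec, 1))  # only "the" and "bank"
```

With the encoder pattern, "bank" can be disambiguated by "loan" later in the sentence; with the causal pattern it cannot, which is the trade-off made to allow left-to-right generation.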

Context windows also matter. Earlier GPT generations had relatively limited context, which constrained long conversations. Later models expanded those windows to tens or hundreds of thousands of tokens. BERT processes full context within a fixed window (512 tokens in the original release), but because it doesn’t generate text sequentially, its context is more about representation than narrative length. Training data differs as well: GPT models typically consume vast corpora of web text, code, and dialogue; the original BERT was pretrained on English Wikipedia and BooksCorpus, and BERT-style models are often tuned further on domain text.

Families of each model:

- BERT variations: BERT-Base, BERT-Large, RoBERTa, DistilBERT

- GPT variations: GPT-2, GPT-3, GPT-3.5, GPT-4, GPT-4o, GPT-5, GPT-5.1

- Middle-ground examples: T5 reframes tasks as text-to-text, bridging understanding and generation

How BERT Learns: Masking and Next-Sentence Predictions

BERT hides random tokens, then reconstructs them, learning relational meaning rather than linear continuation. It also learns whether two segments belong together (next-sentence prediction), a signal intended to help tasks that relate two pieces of text, such as question answering and search ranking. This type of training builds strong embeddings for downstream models.
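To make the masking step concrete, here is a minimal sketch of how one training example might be built. The 15% selection rate and the 80/10/10 split (mask, random token, unchanged) follow the original BERT paper rather than anything stated in this article, and `make_mlm_example` is a hypothetical helper, not a library function.

```python
import random

MASK = "[MASK]"


def make_mlm_example(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for masked language modeling.

    labels[i] holds the original token where the model must make a
    prediction, and None at positions that are not scored.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover this token
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # 80%: replace with [MASK]
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # 10%: random token
            else:
                corrupted.append(tok)                # 10%: leave unchanged
        else:
            corrupted.append(tok)
            labels.append(None)
    return corrupted, labels


tokens = "the cat sat on the mat".split()
corrupted, labels = make_mlm_example(tokens, vocab=tokens, mask_prob=0.5, seed=1)
print(corrupted)
print(labels)
```

Because the model sees the tokens on both sides of each hidden position, the loss rewards representations that capture the whole sentence, not just the prefix.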

Key strengths of BERT-like architectures:

- precision in classification tasks

- better performance in entity recognition

- stable fine-tuning for narrow domains

How GPT Learns: Predictive Decoding and Tokens as Continuity

GPT predicts each token based on previously seen tokens. It treats every task as continuation, so summarization, chat, and code generation all follow the same logic. This method makes generation flexible yet computationally heavier at runtime.
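The continuation loop itself is simple, and a toy model is enough to show it. The sketch below uses a bigram counter as a stand-in for the network: a real GPT conditions on the entire prefix through attention, whereas this toy looks only at the last token. The decoding loop (predict, append, repeat) has the same shape.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which token follows which; a stand-in for a trained model."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, max_tokens=10):
    """Greedy decoding: repeatedly append the most likely next token."""
    output = []
    prev = "<s>"
    for _ in range(max_tokens):
        if prev not in counts:
            break
        nxt = counts[prev].most_common(1)[0][0]
        if nxt == "</s>":  # end-of-sequence marker stops generation
            break
        output.append(nxt)
        prev = nxt  # the new token becomes part of the input
    return output


corpus = [
    "the model writes text",
    "the model writes code",
    "the model writes text",
]
model = train_bigram(corpus)
print(generate(model))  # ['the', 'model', 'writes', 'text']
```

Each output token requires its own prediction step, which is why generation cost grows with the length of the response.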

Key strengths of GPT-style architectures:

- fluid conversational output

- creative rewriting and synthesis

- dynamic experiences for agents and role-play bots

Comparison Table

| Feature | BERT (Encoder) | GPT (Decoder) |
| --- | --- | --- |
| Training objective | Masked language modeling, NSP | Autoregressive next-token prediction |
| Directionality | Bidirectional | Unidirectional |
| Strengths | Classification, QA, embeddings | Generation, agents, conversation |
| Weak spots | Long-form creative output | Precise contextual reasoning |

This architectural contrast shapes practical choices. Some products need structured insight; others need expressive language. The difference between GPT and BERT becomes visible in how each model behaves once deployed.

What Each Model Does Best

Companies apply language models to real workflows rather than abstract benchmarks. BERT often becomes part of systems that need structured signals: fraud checks, contract analysis, moderation queues, or dashboards that highlight key terms from large text streams. Its outputs behave like coordinates rather than sentences, which helps engineers plug them into ranking algorithms, retrieval layers, and analytics tools.
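Treating outputs as coordinates means documents can be ranked by vector similarity. The sketch below assumes embeddings have already been produced by some encoder; the three-dimensional vectors and document names are invented for illustration, and real embeddings have hundreds of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank(query_vec, doc_vecs):
    """Return document ids ordered from most to least similar to the query."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


# Hypothetical precomputed embeddings.
doc_vecs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-reference": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.1]

print(rank(query, doc_vecs))  # ['refund-policy', 'shipping-times', 'api-reference']
```

The same scoring function can feed clustering, deduplication, or a retrieval layer without any text being generated.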

GPT behaves more like a coworker sitting inside the interface. It drafts onboarding messages, rewrites inputs into cleaner instructions, adapts tone for different users, and powers conversational agents. That’s useful when the end goal involves interaction, not silent background processing. The overlap between GPT and BERT shows up in products that need both parts: an encoder sorts and interprets, and a decoder delivers a response the user actually reads.

When a Classification Pipeline Needs BERT’s Context Awareness

- labeling customer messages to route them to the right workflow

- internal search that surfaces documents based on meaning, not matching strings

- tools that condense long archives into structured notes for analysts by extracting entities, key terms, and key sentences

When User-Facing Products Need GPT’s Free-Form Generation

- chat-based onboarding for apps that replace menus with dialogue

- interactive AI characters or role-based companions

- editors that rewrite text more naturally than template-driven scripts

Choose BERT:

You need signals, clusters, or indices that feed another part of the system rather than raw text.

Choose GPT:

You’re shipping something users talk to—answers, drafts, guidance, reactions.

Limitations and Practical Constraints

Real deployments expose trade-offs that aren’t obvious in demos. In BERT vs GPT comparisons, the deciding factor is often how the model behaves when thousands of requests hit production systems, not how well it performs in isolated benchmarks.

Key constraints to plan around:

- Autoregressive generation introduces noticeable latency and drives up GPU time per user session. Every token must be produced in order, which makes long conversations expensive to serve. Even with caching, large-scale messaging apps or AI companions require distributed inference rather than a single powerful node.

- Fine-tuning paths differ, affecting development budgets. BERT adapts to new domains with relatively small datasets and shorter training cycles, so teams can iterate quickly. GPT often needs instruction-style datasets and multi-stage tuning to behave predictably, and costs scale with both batch size and sequence length.

- Context handling shapes architecture decisions. GPT can keep long histories available, but storing and passing that context consumes memory and slows inference. BERT processes fixed-length chunks, so long documents require chunking or retrieval layers that map text to embeddings before analysis.

- Reliability issues surface in real-world agents. Without grounding, GPT can generate confident but incorrect statements, forcing teams to add retrieval, validation, or policy filters. BERT avoids improvisation but cannot act as a standalone interface, which means extra components are needed to produce user-facing text.
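The chunking mentioned under context handling can be sketched as a sliding window over tokens. This is one possible approach, not a prescribed one: the default of 512 matches the original BERT sequence limit, the overlap of 64 is an arbitrary illustrative choice, and a real pipeline would also reserve room for special tokens such as `[CLS]` and `[SEP]`.

```python
def chunk_tokens(tokens, max_len=512, overlap=64):
    """Split a token list into windows of at most max_len tokens.

    Consecutive windows share `overlap` tokens so that text cut at a
    boundary still appears with some context in the next chunk.
    """
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be >= 0 and smaller than max_len")
    stride = max_len - overlap
    chunks = []
    for start in range(0, len(tokens), stride):
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


tokens = list(range(10))  # stand-in for token ids
print(chunk_tokens(tokens, max_len=4, overlap=1))
# [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
```

Each chunk can then be embedded or classified independently, with the per-chunk results aggregated afterwards.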

Future of NLP: Hybrid Models and Task-First Design

The next wave of language systems builds less around single-model dominance and more around mixing components that handle different parts of a workflow. In many setups, BERT vs GPT doesn’t look like a debate but a pipeline: an encoder filters or ranks information, then a decoder turns that context into readable output. This separation keeps generation flexible while maintaining structure where accuracy matters.
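A rough sketch of that pipeline shape follows. Everything here is a placeholder: `word_overlap` stands in for an encoder-based similarity score, the `generate` argument stands in for a call to whatever generative model a team uses, and the prompt wording is invented for the example.

```python
def retrieve(query, documents, score, top_k=2):
    """Encoder side: keep the top_k documents by relevance score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query, passages):
    """Pack the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


def answer(query, documents, score, generate, top_k=2):
    """Decoder side: hand the grounded prompt to a text generator."""
    passages = retrieve(query, documents, score, top_k)
    return generate(build_prompt(query, passages))


def word_overlap(query, doc):
    """Placeholder scorer; a real system would compare embeddings."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


docs = [
    "Refunds are issued within 14 days.",
    "Shipping takes 3 to 5 business days.",
    "The API rate limit is 100 requests per minute.",
]
query = "how long do refunds take"

# Passing the identity function as `generate` simply shows the prompt
# the generative model would receive.
print(answer(query, docs, word_overlap, generate=lambda prompt: prompt, top_k=1))
```

Keeping the scorer and the generator as injected functions lets either half be swapped without touching the other, which mirrors the separation described above.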

Instruction-tuned encoders are becoming more common. They adapt BERT-style architectures to follow task-level prompts, which helps when products need deterministic behavior instead of creative improvisation. On the generative side, models keep growing context windows and memory layers, making them better at long-running conversations and role-based assistants that learn from previous interactions.

A noticeable trend is fragmentation into smaller, specialized models instead of relying on one giant LLM. Startups fine-tune compact architectures on medical notes, legal contracts, or customer data so they can run fast, privately, and without paying for full-scale inference every time a user types a sentence. Some teams mix retrieval layers, embeddings, rules, and lightweight generators to reduce cost without sacrificing output quality.

Personalization matters too. Systems are shifting toward persistent profiles, preference tracking, and short-term memory, turning assistants into evolving agents rather than static chatbots.

Turn Language Models Into Working Systems with Scrile AI

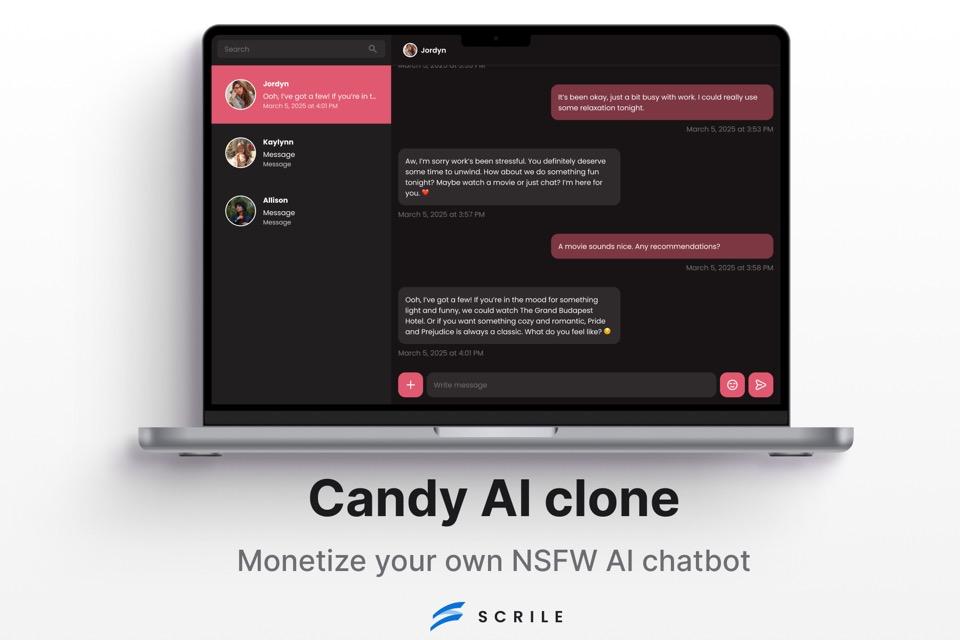

Most companies don’t want to choose between BERT-style understanding and GPT-style generation—they want both working together inside a product that fits their market. In BERT vs GPT comparisons, the real question becomes how fast a business can turn these models into something people actually use: a support agent that knows company policies, a role-play companion with memory, or a multilingual bot trained on internal documents. Scrile AI steps in as a development service that builds these systems to spec rather than selling a fixed SaaS platform.

Scrile AI supports projects that rely on encoder-based pipelines, generative models, or hybrid stacks. Instead of forcing teams to adopt a single tech stack, it adapts architecture to the product’s needs: private deployments for companies that care about data control, domain-trained models for niche industries, or multicomponent agents that blend embeddings, retrieval, and generation. The result is not just a chatbot—it’s a custom LLM that matches the tone, workflow, and constraints of a specific business.

What Scrile AI helps build:

- Custom language models hosted privately, trained on corporate knowledge, without sending data to public endpoints

- AI companions, role-play agents, and chat-first user interfaces, powered by generative pipelines and dynamic memory

- Internal tools for search, triage, and classification, where encoder models enhance retrieval and tagging rather than produce text

Ownership is a major part of the offer. Instead of renting tokens from external APIs, teams can deploy models on dedicated infrastructure with full control over data flows, latency, and retention. For companies handling sensitive datasets such as customer support logs, medical notes, or multilingual archives, this setup makes compliance requirements easier to meet while still enabling natural interaction.

Scrile AI can architect systems that behave like GPT, interpret text like BERT, or combine both into one pipeline. That means you’re not picking a model—you’re designing an AI product with the right building blocks.

Conclusion

Choosing BERT or GPT is less about hype and more about what a product needs to deliver. Workflows grounded in interpreting text—ranking search results, sorting messages, detecting intent—benefit from BERT-style models. Tools built around conversations, assistants, and fluid responses lean toward GPT-style approaches. The real edge appears when teams combine them instead of treating BERT vs GPT as a binary choice.

If you’re planning to build an AI product, reach out to the Scrile AI team and collaborate on a custom model designed for your domain.

FAQ

Is BERT better than GPT?

It depends on the task. BERT handles structured interpretation well, such as tagging, classification, and extracting meaning from documents. GPT performs better in generative scenarios where the output must read like a natural message or narrative.

Is ChatGPT using BERT?

No. Both rely on Transformer designs, but they diverge in architecture. ChatGPT operates on generative decoder stacks, while BERT uses encoders built for understanding rather than conversation.

Is BERT an LLM?

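In the broad sense, yes. BERT was trained on large text corpora and is often described as an early large language model, though at roughly 110 million (Base) to 340 million (Large) parameters it is small by current standards, and its architecture is optimized for analysis instead of free-form text generation.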